LoRA Land🎢

Fine-tuned LLMs that outperform GPT-4, served on a single GPU


Built on Open Source Foundations

Fine-Tuned On Managed Infra

State-of-the-art fine-tuning techniques such as quantization, low-rank adaptation, and memory-efficient distributed training are combined with right-sized compute so that your jobs train successfully and as efficiently as possible.
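To make low-rank adaptation concrete, here is a minimal sketch in plain Python. It is an illustration of the general LoRA idea, not Predibase's training code: the frozen weight `W` is left untouched, and only two small matrices `A` and `B` are trained. The function names, the rank `r = 8`, and the 4096 x 4096 layer size are assumptions chosen for the example.

```python
def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha, r):
    """Compute W x + (alpha / r) * B (A x).

    W is the frozen base weight (d_out x d_in).
    A (r x d_in) and B (d_out x r) are the small trainable matrices.
    """
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]


def param_counts(d_out, d_in, r):
    """Return (full fine-tuning params, LoRA params) for one weight matrix."""
    return d_out * d_in, r * (d_in + d_out)


# Illustrative sizes only: one 4096 x 4096 projection with rank 8.
full, lora = param_counts(4096, 4096, 8)
print(full, lora)  # 16777216 65536
```

In this example the adapter trains 65,536 values instead of 16,777,216 for the layer, which is why adapters are cheap to train and store. With `B` initialized to zeros, `lora_forward` returns exactly the base model's output, so training starts from the unmodified model.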

Cost-Efficient Inference

Stop wasting time managing distributed clusters. Get fully managed, optimized compute configured for your needs, without the extra time and cost.


LoRA Land: Fine-Tuned Open-Source LLMs that Outperform GPT-4

LoRA Land is a collection of 25+ fine-tuned Mistral-7B models that outperform GPT-4 in task-specific applications. This collection of fine-tuned open-source models offers a blueprint for teams seeking to deploy AI systems efficiently and cost-effectively.

Introducing the first purely serverless solution for fine-tuned LLMs

Serverless Fine-tuned Endpoints allow users to query their fine-tuned LLMs without spinning up a dedicated GPU deployment. Pay only for what you use, not for idle GPUs. Try it today with Predibase’s free trial!

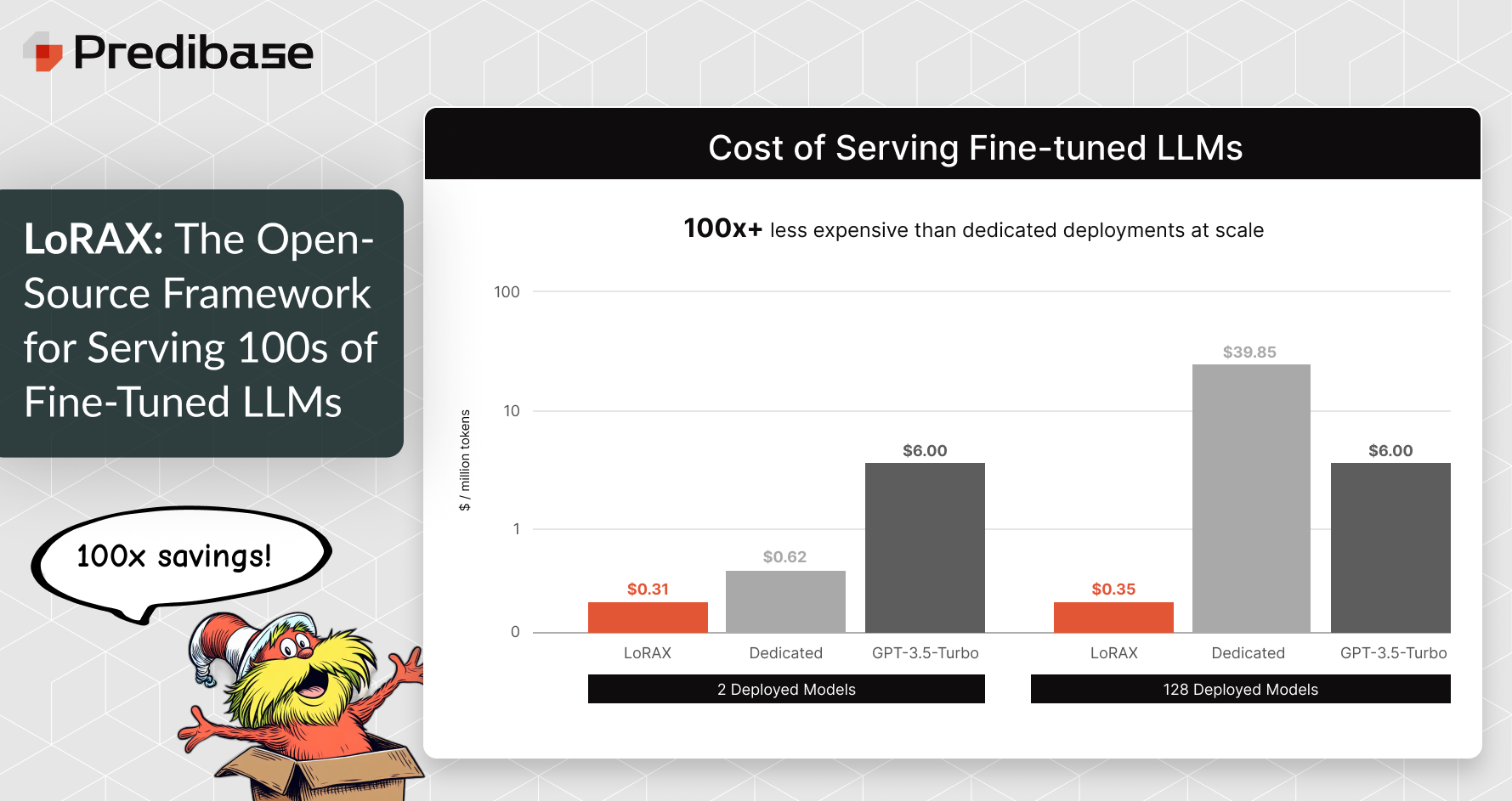

LoRAX: The Open Source Framework for Serving 100s of Fine-Tuned LLMs in Production

We recently announced LoRA Exchange (LoRAX), a framework that makes it possible to serve 100s of fine-tuned LLMs at the cost of one GPU with minimal degradation in throughput and latency. Today, we’re excited to release LoRAX to the open-source community.
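The idea of serving many fine-tuned models from one GPU can be sketched as a toy in plain Python. This is not the LoRAX API or its implementation. It only illustrates the general pattern of keeping one shared base weight in memory and selecting a small adapter per request. The class names and the adapter ids `"support"` and `"legal"` are hypothetical.

```python
from dataclasses import dataclass


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


@dataclass
class Adapter:
    A: list       # r x d_in
    B: list       # d_out x r
    scale: float  # alpha / r


class SharedBaseServer:
    """Toy server: one base weight, many small adapters (hypothetical)."""

    def __init__(self, W):
        self.W = W          # loaded once, shared by every request
        self.adapters = {}  # adapter_id -> Adapter

    def register(self, adapter_id, adapter):
        self.adapters[adapter_id] = adapter

    def infer(self, adapter_id, x):
        adapter = self.adapters[adapter_id]
        base = matvec(self.W, x)
        delta = matvec(adapter.B, matvec(adapter.A, x))
        return [b + adapter.scale * d for b, d in zip(base, delta)]


# Two hypothetical task adapters sharing one base weight.
server = SharedBaseServer(W=[[1.0, 0.0], [0.0, 1.0]])
server.register("support", Adapter(A=[[1.0, 0.0]], B=[[1.0], [0.0]], scale=1.0))
server.register("legal", Adapter(A=[[0.0, 1.0]], B=[[0.0], [1.0]], scale=0.5))

print(server.infer("support", [1.0, 2.0]))  # [2.0, 2.0]
print(server.infer("legal", [1.0, 2.0]))    # [1.0, 3.0]
```

Because each adapter is tiny compared with the base weight, adding another fine-tuned model costs little extra memory in this sketch. A production system additionally has to handle adapter loading, batching across adapters, and GPU scheduling, none of which is modeled here.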